Beyond Entities: A Modern Guide to Relation Extraction with LLMs

- Daniel Pichardo

- Artificial intelligence , Nlp

- October 17, 2025

Table of Contents

Introduction

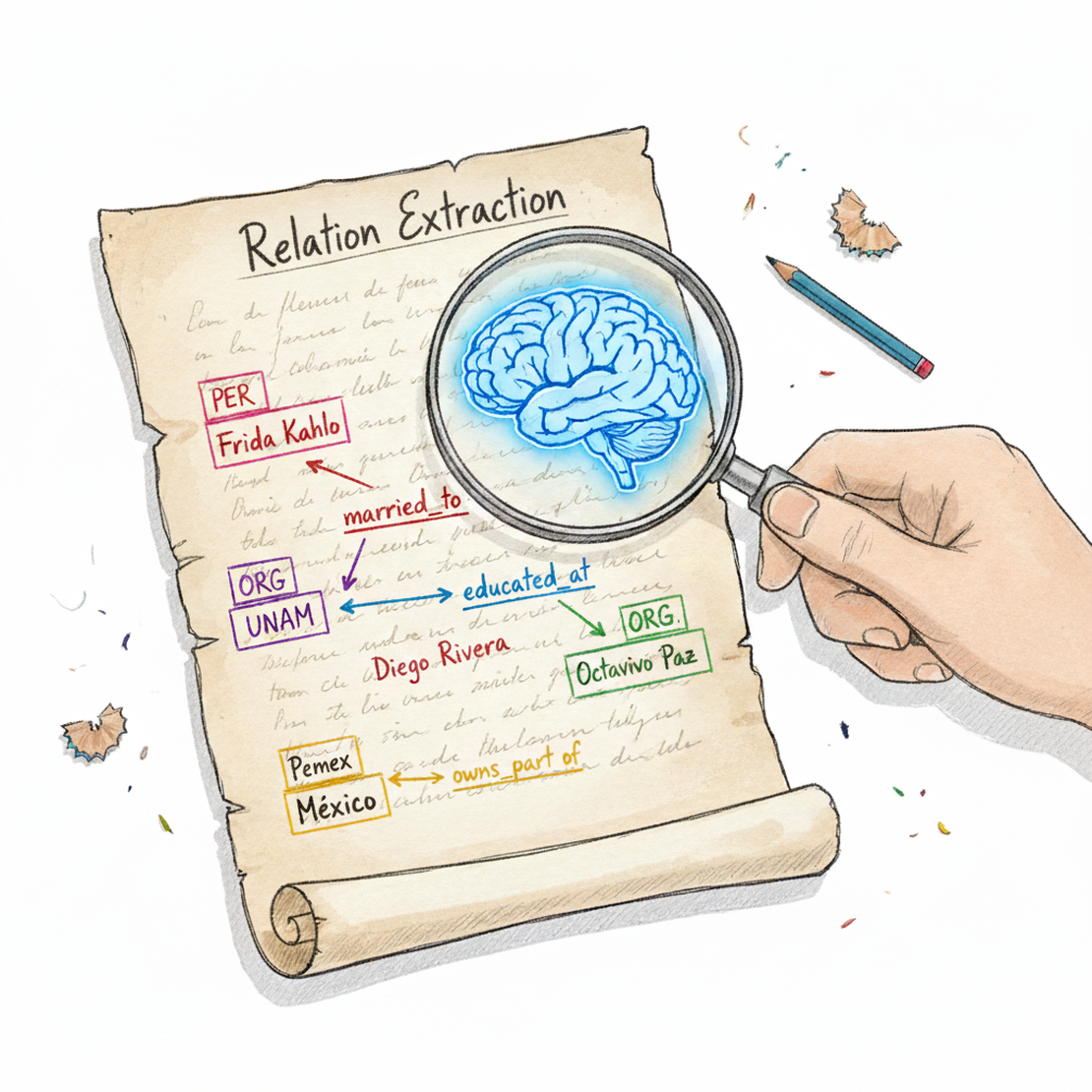

Relation Extraction (RE) is a critical NLP task focused on identifying and classifying semantic relationships between named entities in text. Moving beyond merely identifying entities, RE seeks to understand the connections and interactions between them, forming the bedrock of knowledge graph construction, information retrieval, and sophisticated question-answering systems. The integration of pre-trained Large Language Models (LLMs) has profoundly transformed RE, enabling more accurate and nuanced understanding of relational semantics. This guide offers an in-depth exploration of RE with modern LLMs, covering theoretical foundations, practical methodologies, and advanced considerations for researchers and practitioners.

What is Relation Extraction (RE)?

Relation Extraction involves finding a semantic relationship between two or more entities in a given text. While Named Entity Recognition (NER) identifies what the key pieces of information are, RE determines how these entities are connected.

Consider the sentence:

"Apple was founded by Steve Jobs in Cupertino."

An NER system would identify:

Apple: ORGSteve Jobs: PERCupertino: LOC

A Relation Extraction system would go further, identifying relationships such as:

- (

Apple,was founded by,Steve Jobs) ->ORG-FOUNDER-PER - (

Apple,in,Cupertino) ->ORG-LOCATED_IN-LOC

These structured relationships are invaluable for building knowledge graphs, populating databases, and enabling more intelligent information systems.

Types of Relationships

Relationships can be categorized in several ways:

Intra-sentential vs. Inter-sentential:

- Intra-sentential: The entities and their relationship are expressed within a single sentence. This is the most common and often simpler case.

- Inter-sentential: The entities and their relationship are spread across multiple sentences, requiring broader contextual understanding. This is significantly more challenging.

Pre-defined vs. Open Information Extraction (OpenIE):

- Pre-defined (Closed RE): We define a specific set of relation types (e.g.,

works_for,located_in,founded_by). This is common for knowledge graph construction. - OpenIE: The system extracts arbitrary relational tuples without a pre-defined schema, often using verb phrases (e.g., “Obama visited Kenya”). This is useful for discovering new relationships. This guide primarily focuses on closed (pre-defined) RE.

- Pre-defined (Closed RE): We define a specific set of relation types (e.g.,

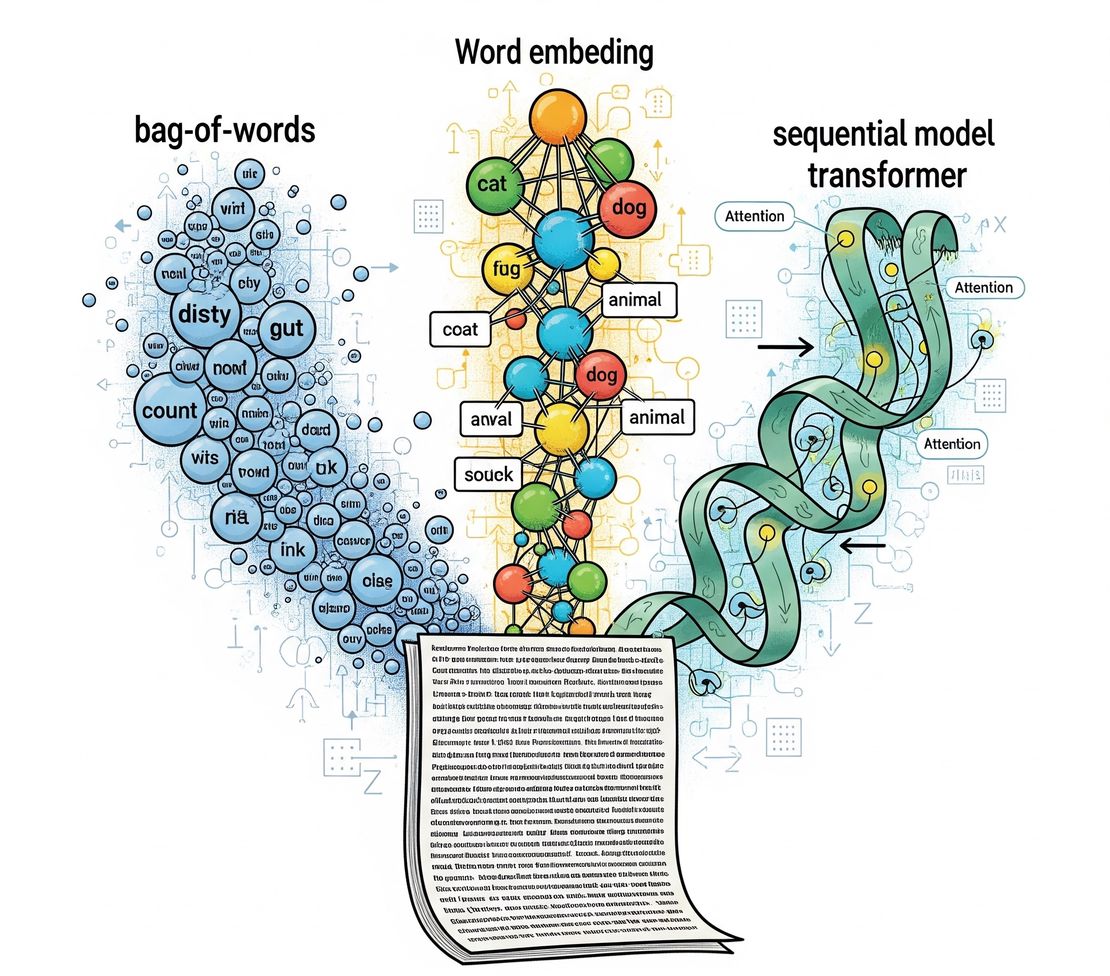

Leveraging LLMs for Relation Extraction: Key Approaches

The capabilities of pre-trained LLMs in understanding context and semantic nuances make them exceptionally well-suited for RE. We primarily use two paradigms: fine-tuning encoder-based LLMs and prompting generative LLMs.

Approach 1: Fine-tuning Encoder-based LLMs

This is the dominant approach for building high-performance, domain-specific RE systems, especially when a good amount of labeled data is available.

How it Works:

Entity Identification: First, NER is performed to identify the entities within the text. The RE task typically operates on pairs of identified entities.

Model Architecture:

- We start with a pre-trained encoder LLM (e.g., BERT, RoBERTa).

- The input to the model consists of the sentence with special tokens often used to mark the two entities for which we want to predict a relationship. For example:

[CLS] [E1]Apple[/E1] was founded by [E2]Steve Jobs[/E2] in Cupertino. [SEP] - The LLM processes this input, generating contextualized embeddings for each token.

- Classification Head: A classification layer (e.g., a softmax layer) is added on top. This layer takes the final contextualized embeddings (often the

[CLS]token’s embedding, or a concatenation/pooling of the entity embeddings) and predicts the relation type from a pre-defined set of categories (e.g.,FOUNDED_BY,LOCATED_IN,NO_RELATION).

Fine-tuning: The entire model (LLM + classification head) is fine-tuned on a labeled dataset where each entity pair is annotated with its corresponding relation type. The model learns to classify the relationship based on the context provided by the sentence and the marked entities. The Fine-Tuning Process Steps is as next:

- Select a Base Model: Choose a robust encoder model (e.g.,

bert-base-cased). - Add Special Tokens: The tokenizer must be configured to recognize the new entity markers (

<e1>,</e1>,<e2>,</e2>) so they are treated as distinct words. - Tokenize & Encode: Tokenize the marked sentences. The model’s final output will be a sequence of tokens, but for RE, a pooled output vector (often the vector corresponding to the

[CLS]token) is used for the final classification. - Define Model Head: Load the pre-trained model using a sequence classification class (e.g.,

AutoModelForSequenceClassification). This adds a classification layer that predicts one of your predefined relation types (e.g.,employed_by,lives_in,no_relation). - Training & Evaluation: Train the model using labeled data. Evaluation must use Macro-Averaged Precision, Recall, and F1-Score to ensure balanced performance across all relation classes, especially the

no_relationclass.

- Select a Base Model: Choose a robust encoder model (e.g.,

Why it’s Effective: LLMs excel at capturing the nuanced semantic interactions between words. By focusing the model on specific entity pairs (via special tokens or by using their pooled embeddings), we enable it to learn the subtle cues that indicate a particular relationship. The pre-training allows the model to leverage vast general language knowledge, even with relatively small, task-specific datasets.

Key Design Choices:

- Entity Masking/Marking: Using special tokens (

[E1],[/E1],[E2],[/E2]) or entity types as input to the model helps it focus on the entities of interest. Another technique involves masking out the entities and using only the surrounding context, or concatenating the entity embeddings directly. - Loss Function: Cross-entropy loss is standard for multi-class classification.

- Dealing with “No Relation”: A significant challenge is that most entity pairs in a sentence do not have a defined relationship. The model must learn to predict a

NO_RELATION(orOTHER) class, which often represents a large portion of the training data.

Approach 2: Prompting Generative LLMs (Zero-shot and Few-shot RE)

For tasks requiring flexibility, less training data, or rapid prototyping, generative LLMs (like GPT-3.5/4, Llama 2/3, Mistral) can be directly prompted without fine-tuning.

Zero-shot RE

Here, the model is given a natural language instruction to extract relations.

Example Zero-shot Prompt:

Extract all (entity1, relation, entity2) triples from the following text.

Consider relations like "founded by", "located in", "works for".

Return the output as a list of dictionaries.

Text: "Apple was founded by Steve Jobs in Cupertino, California. Tim Cook now leads the company."

Expected Output:

[

{"entity1": "Apple", "relation": "founded by", "entity2": "Steve Jobs"},

{"entity1": "Apple", "relation": "located in", "entity2": "Cupertino"},

{"entity1": "Apple", "relation": "located in", "entity2": "California"},

{"entity1": "Tim Cook", "relation": "leads", "entity2": "Apple"}

]

Pros: Highly flexible, no labeled data needed, can extract novel or user-defined relation types on the fly. Cons: Less consistent output format, potentially lower accuracy than fine-tuned models, higher inference cost and latency, and can struggle with complex sentences or subtle relations.

Few-shot RE

Providing examples in the prompt significantly improves the generative model’s performance and output consistency.

Example Few-shot Prompt:

Extract (subject, relation, object) triples from the text provided below.

The relations to extract are: "founded_by", "located_in", "employed_by".

Format the output as a JSON list of dictionaries.

Text: "Elon Musk works for Tesla in Austin."

Output:

[

{"subject": "Elon Musk", "relation": "employed_by", "object": "Tesla"},

{"subject": "Tesla", "relation": "located_in", "object": "Austin"}

]

---

Text: "Bill Gates co-founded Microsoft."

Output:

[

{"subject": "Bill Gates", "relation": "founded_by", "object": "Microsoft"}

]

---

Text: "Apple was founded by Steve Jobs in Cupertino, California. Tim Cook now leads the company."

Output:

Pros: Significantly improves accuracy and adherence to output format compared to zero-shot. Cons: Requires careful crafting of examples, increases token usage and cost, and still may not match the precision of fine-tuned models for highly specialized domains.

Prompting Process Steps:

- Define the task and format: Instruct the model on its role, the entities it should look for, and the desired relationship types.

- System Instruction: “You are a Relation Extractor. For the given text, find all (PERSON, ORG) pairs and classify the relationship into ’employed_by’, ‘founded’, or ’none’.”

- Specify output format: Insist on a structured output (e.g., JSON) for easy parsing.

- Example Output Schema:

[{"entity1": "...", "relation": "...", "entity2": "..."}]

- Example Output Schema:

- Submit input: Provide the raw, unmarked text.

- Few-Shot example (Optional): To increase accuracy and enforce a strict format, provide a few successful examples of text input and the corresponding structured output before the final query.

Fine-Tuning vs. Generative Prompting: Feature Comparison

| Feature | Fine-Tuning (Encoder Models) | Prompting (Generative LLMs) |

|---|---|---|

| Linguistic Structure | Classification over a fixed, marked input | Generation of structured text based on context |

| Accuracy | Generally higher (State-of-the-Art) | Good, but can struggle with complex entities or long text |

| Cost | High compute cost for training | Per-query API cost for inference |

| Adaptability | Requires retraining for new relation types | Instantly adapts to new relation types via prompt change |

Key Considerations for RE Projects

Multi-task Learning

A powerful variant involves training a single LLM to perform both NER and RE simultaneously. This allows the model to leverage shared linguistic features and improve performance on both tasks, especially when entities and relations are interdependent.

Practical Workflow and Evaluation

Implementing an RE system with LLMs typically involves these stages:

- Define Relation Types: Crucially define the semantic relationships you want to extract. Be clear about directionality (e.g.,

employed_byvs.employs). Also, define arguments (e.g.,PERSONemployed_byORGANIZATION). - Data Annotation:

- For Fine-tuning: Requires a dataset where sentences are annotated with entity spans AND the relations between specific entity pairs. This is significantly more complex and time-consuming than NER annotation. Tools like Doccano or Prodigy are suitable.

- For Prompting: While no annotation is strictly required for the target task, you might need a small set of examples to include in few-shot prompts.

- Preprocessing:

- Entity Linking/Coreference Resolution: For robust systems, you might need to resolve coreferences (e.g., “IBM” and “the company”) and link entities to a knowledge base (e.g.,

Apple->Q312in Wikidata). - Tokenization: Use the tokenizer consistent with your chosen LLM.

- Entity Linking/Coreference Resolution: For robust systems, you might need to resolve coreferences (e.g., “IBM” and “the company”) and link entities to a knowledge base (e.g.,

- Model Selection & Training:

- Fine-tuning: Choose an encoder LLM (e.g.,

bert-base-cased,roberta-large). Integrate with libraries like Hugging Facetransformers. - Prompting: Select a suitable generative LLM (e.g., OpenAI’s GPT models, open-source alternatives via Hugging Face API or local deployments).

- Fine-tuning: Choose an encoder LLM (e.g.,

- Evaluation:

- RE models are evaluated using Precision, Recall, and F1-score.

- A prediction is considered correct if:

- Both entities in the relation are correctly identified (or correctly linked to true entities).

- The relation type is correctly classified.

- The direction of the relation is correct (if applicable).

- Evaluation often happens at the instance level (per relation triple).

Advanced Challenges and Considerations

Relation Extraction remains a challenging task with several complexities:

- Data Scarcity: Creating high-quality, comprehensively labeled datasets for RE is labor-intensive and expensive, particularly for specific domains or rare relations. This is why few-shot and zero-shot approaches with generative LLMs are gaining traction.

- Long-Range Dependencies: When entities are far apart in a sentence or across sentences (inter-sentential RE), capturing their relationship is difficult due to the increased contextual distance. LLMs with larger context windows help, but it remains a hard problem.

- Overlapping Relations: A single entity might participate in multiple relations within the same sentence (e.g., “Obama, a former president, visited Kenya.” ->

Obama IS_A President,Obama VISITED Kenya). - Complex Relation Types: Some relationships are not simple binary connections but involve multiple entities or require complex inferencing (e.g., “The merger of Google and DeepMind resulted in a new AI division.”).

- Bias in Data: Training data can contain biases, leading the model to learn and perpetuate incorrect or prejudiced relationships. Careful data curation is essential.

- Explainability: Understanding why an LLM predicted a specific relation can be difficult. Techniques like attention visualization can offer some insights but are not a complete solution.

- Schema Evolution: In dynamic domains, new entity types and relation types might emerge. Fine-tuned models require re-training, while prompt-based methods offer more flexibility in adapting to new schemas.

- Imbalance (The

no_relationProblem): In any real-world corpus, the vast majority of entity pairs have no meaningful relation. This creates a severe class imbalance. Macro-averaging the F1-score is essential for evaluation, as it prevents the model from trivially achieving high accuracy by simply predictingno_relationmost of the time. - Data Quality: RE datasets are time-consuming to create. Ensure high inter-annotator agreement, as inconsistent or ambiguous labels will cripple model performance.

- Efficiency: Fine-tuning requires large memory (VRAM). Always start with smaller batch sizes and use efficient models like DistilBERT or TinyBERT if hardware is a constraint.

Conclusion

Relation Extraction is a pivotal NLP task for transforming unstructured text into structured knowledge. Pre-trained LLMs, whether through fine-tuning or sophisticated prompting, have dramatically advanced the state-of-the-art in RE. Researchers continue to push the boundaries, exploring new architectures, learning paradigms, and strategies to tackle the inherent complexities of semantic relationship understanding in diverse and evolving text data. The future of RE undoubtedly lies in even more intelligent, adaptable, and multimodal LLM-driven systems.